Caching and local datasets#

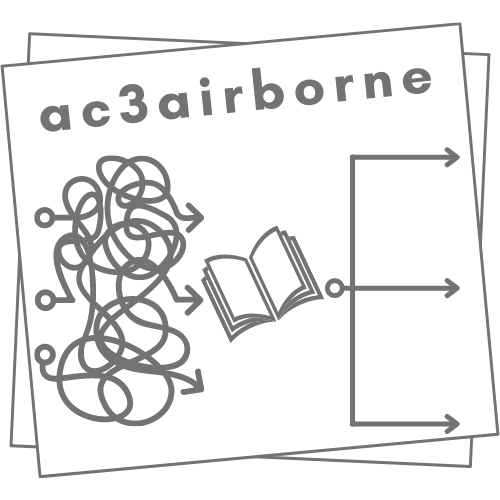

Datasets accessed via the intake catalog can be downloaded either into a temporary folder, from which they are deleted when Python is restarted, or permanently into a specified directory. If the dataset already exists in the specified directory, intake loads the data from the local copy instead of downloading it again from the remote server. Permanent caching is recommended for large datasets and for datasets that are used regularly.

The following example shows how to supply local directories to intake using the simplecache functionality. When many datasets are stored locally, we suggest keeping their directories in a single .yaml file, so that local paths do not have to be specified within the Python routines.

Loading the intake catalog#

We load the intake catalog from ac3airborne, which contains the paths to the remote servers where the files are stored.

import ac3airborne

cat = ac3airborne.get_intake_catalog()

Additionally, we load the flight-phase separation, which contains information on every research flight.

meta = ac3airborne.get_flight_segments()

Example: Dropsonde data#

The caching functionality is demonstrated with the dropsonde data published online in the PANGAEA database. The intake catalog contains an entry for the dataset file on PANGAEA.

Option 1: Download into temporary folder#

The download into the temporary folder is the default behaviour. Usually the dataset is stored in the /tmp directory. We will download the first dropsonde of ACLOUD_P5_RF23. The parameter i_sonde describes the dropsonde number during the research flight.

ds_dsd = cat['ACLOUD']['P5']['DROPSONDES']['ACLOUD_P5_RF23'](i_sonde=1).to_dask()

/net/sever/mech/miniconda3/envs/howtoac3/lib/python3.11/site-packages/intake_xarray/base.py:21: FutureWarning: The return type of `Dataset.dims` will be changed to return a set of dimension names in future, in order to be more consistent with `DataArray.dims`. To access a mapping from dimension names to lengths, please use `Dataset.sizes`.

'dims': dict(self._ds.dims),
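
The location of the temporary folder depends on the platform and on environment variables. As a small aside, the sketch below uses Python's standard tempfile module to report the directory that Python uses for temporary files. That the temporary cache is created below this directory is an assumption based on the /tmp default mentioned above.

```python
import os
import tempfile

# Directory that Python uses for temporary files on this system.
# It honours the TMPDIR, TEMP and TMP environment variables.
tmp_root = tempfile.gettempdir()
print(tmp_root)

# Assumption: temporary cache folders are created below this directory,
# so it is not a suitable place for long-term storage.
print(os.path.isdir(tmp_root))
```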

By default, the name of the downloaded file is not human-readable, because the cached file is named after a hash of the remote URL. By setting the parameter same_names of the simplecache group to True and supplying it to the storage_options parameter, the downloaded file gets the same file name as the remote file (i.e. the file on PANGAEA).

kwds = {'simplecache': dict(
    same_names=True
)}

ds_dsd = cat['ACLOUD']['P5']['DROPSONDES']['ACLOUD_P5_RF23'](i_sonde=1, storage_options=kwds).to_dask()
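
To illustrate the effect of same_names, the following minimal sketch mimics the two naming rules with the standard library only. It does not call the caching layer itself. The function name cache_file_name and the URL are hypothetical, and the use of SHA-256 is an assumption, since the exact hashing scheme is an implementation detail of the caching layer.

```python
import hashlib
import os


def cache_file_name(url: str, same_names: bool) -> str:
    """Illustrative naming rule for the cached copy of `url`."""
    if same_names:
        # keep the basename of the remote file
        return os.path.basename(url)
    # otherwise derive an opaque name from the full URL (assumed: SHA-256)
    return hashlib.sha256(url.encode()).hexdigest()


# hypothetical URL, used only for illustration
url = 'https://example.org/data/dropsonde_file.nc'

print(cache_file_name(url, same_names=True))
print(cache_file_name(url, same_names=False))
```

Hashed names avoid collisions between remote files that share a basename, whereas readable names make it easier to inspect the cache directory by hand.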

Option 2: Permanent download into local directory#

Under the storage_options parameter, we can also specify the local directory of the dataset. The path is supplied to the cache_storage parameter of the simplecache group, as shown below. If the remote file already exists in the local directory, the local file is read. Otherwise, the remote file is downloaded and stored permanently at the specified location. The next time the data is imported, intake uses the local file.

Here, we will store the data relative to the current working directory at ./data/dropsondes.

kwds = {'simplecache': dict(
    cache_storage='./data/dropsondes',
    same_names=True
)}

ds_dsd = cat['ACLOUD']['P5']['DROPSONDES']['ACLOUD_P5_RF23'](i_sonde=1, storage_options=kwds).to_dask()
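
The "read locally if present, otherwise download and keep" behaviour described above can be sketched in a few lines. This is a conceptual illustration only, not the implementation used by intake. The function fetch, the stand-in fake_download and the file name example.nc are all hypothetical.

```python
import os
import tempfile
from typing import Callable, List


def fetch(name: str, cache_dir: str, download: Callable[[str], bytes]) -> str:
    """Return the local path of `name`, downloading it only if it is missing."""
    os.makedirs(cache_dir, exist_ok=True)
    local_path = os.path.join(cache_dir, name)
    if not os.path.exists(local_path):
        # first access: fetch the remote content and keep a local copy
        with open(local_path, 'wb') as f:
            f.write(download(name))
    return local_path


calls: List[str] = []


def fake_download(name: str) -> bytes:
    """Stand-in for a remote download that records every request."""
    calls.append(name)
    return b'placeholder content'


demo_dir = tempfile.mkdtemp()
first = fetch('example.nc', demo_dir, fake_download)
second = fetch('example.nc', demo_dir, fake_download)

# the second call reuses the local copy, so only one download is recorded
print(first == second, len(calls))
```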

Managing directories of multiple datasets#

Datasets may be stored locally in different directories. Instead of specifying the directory in every Python script, we can keep the paths of all datasets in a single file. Here, we use a yaml file, as it can easily be read into a Python dictionary.

The file may be structured like this:

DROPSONDES: '/local/path/to/dropsondes'

BROADBAND_IRRADIANCE: '/local/path/to/broadband_irradiance'

FISH_EYE: '/local/path/to/fish_eye'

In the following, the data is downloaded to, or read from, the ./data folder in the current working directory.

import yaml

Now we read the predefined yaml file.

with open('./local_datasets.yaml', 'r') as f:
    local_dir = yaml.safe_load(f)

print(local_dir)

{'DROPSONDES': './data/dropsondes', 'BROADBAND_IRRADIANCE': './data/broadband_irradiance', 'FISH_EYE': './data/fish_eye'}

As a test, we will download the dropsonde data from ACLOUD RF05.

dataset = 'DROPSONDES'

flight_id = 'ACLOUD_P5_RF05'

Using the dataset name, we can look up the directory where the data is stored.

print(local_dir[dataset])

./data/dropsondes

We now pass this path to the storage_options parameter.

kwds = {'simplecache': dict(
    cache_storage=local_dir[dataset],
    same_names=True
)}
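
When several scripts build the same dictionary, it may be convenient to wrap this step in a small helper. The sketch below is one possible way to do so. The function name simplecache_options is hypothetical, and the fallback for datasets without a configured directory (omitting cache_storage so that the default temporary cache is used) is a design assumption rather than part of the original workflow.

```python
from typing import Dict


def simplecache_options(local_dirs: Dict[str, str], dataset: str) -> dict:
    """Build the storage_options dictionary for one dataset."""
    options = {'same_names': True}
    directory = local_dirs.get(dataset)
    if directory is not None:
        # a directory is configured: cache permanently at this location
        options['cache_storage'] = directory
    # without an entry, cache_storage is omitted (temporary cache)
    return {'simplecache': options}


local_dirs = {'DROPSONDES': './data/dropsondes'}

print(simplecache_options(local_dirs, 'DROPSONDES'))
print(simplecache_options(local_dirs, 'FISH_EYE'))
```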

Now we download or use the local dropsonde file. Afterwards, check if the directory ./data/dropsondes has been created and contains the file DS_ACLOUD_Flight_05_20170525_V2.nc. If the directory and the file already exist, the local file will be read.

ds = cat['ACLOUD']['P5'][dataset][flight_id](i_sonde=1, storage_options=kwds).to_dask()
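
The check of the cache directory can also be done from Python. The following sketch lists the netCDF files found in a directory using pathlib. The helper name cached_files and the '*.nc' pattern are assumptions made for this illustration.

```python
from pathlib import Path
from typing import List


def cached_files(directory: str, pattern: str = '*.nc') -> List[str]:
    """Return the sorted names of cached files in `directory`."""
    path = Path(directory)
    if not path.is_dir():
        # nothing has been cached at this location yet
        return []
    return sorted(p.name for p in path.glob(pattern))


# lists the cached dropsonde files, or prints [] if the folder does not exist
print(cached_files('./data/dropsondes'))
```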

Get data for offline usage#

The following example presents a way to download all the data of a certain instrument for all research flights for offline usage. First, we get all the flights for which data of the instrument is available.

dataset = 'DROPSONDES'

flight_ids = []

for campaign in ['ACLOUD', 'AFLUX', 'MOSAiC-ACA']:
    # note: the flight IDs are taken from the 'MiRAC-A' entries of each campaign
    flight_ids.extend(list(cat[campaign]['P5']['MiRAC-A']))

print(flight_ids)

['ACLOUD_P5_RF04', 'ACLOUD_P5_RF05', 'ACLOUD_P5_RF06', 'ACLOUD_P5_RF07', 'ACLOUD_P5_RF08', 'ACLOUD_P5_RF10', 'ACLOUD_P5_RF11', 'ACLOUD_P5_RF14', 'ACLOUD_P5_RF15', 'ACLOUD_P5_RF16', 'ACLOUD_P5_RF17', 'ACLOUD_P5_RF18', 'ACLOUD_P5_RF19', 'ACLOUD_P5_RF20', 'ACLOUD_P5_RF21', 'ACLOUD_P5_RF22', 'ACLOUD_P5_RF23', 'ACLOUD_P5_RF25', 'AFLUX_P5_RF03', 'AFLUX_P5_RF04', 'AFLUX_P5_RF05', 'AFLUX_P5_RF06', 'AFLUX_P5_RF07', 'AFLUX_P5_RF08', 'AFLUX_P5_RF09', 'AFLUX_P5_RF10', 'AFLUX_P5_RF11', 'AFLUX_P5_RF12', 'AFLUX_P5_RF13', 'AFLUX_P5_RF14', 'AFLUX_P5_RF15', 'MOSAiC-ACA_P5_RF05', 'MOSAiC-ACA_P5_RF06', 'MOSAiC-ACA_P5_RF07', 'MOSAiC-ACA_P5_RF08', 'MOSAiC-ACA_P5_RF09', 'MOSAiC-ACA_P5_RF10', 'MOSAiC-ACA_P5_RF11']

Now we simply loop over the flights; the loop below covers the ACLOUD flights listed in the catalog for this dataset. In the case of the dropsondes, we set the i_sonde parameter to 1, since all dropsondes of a flight are contained in the same file. This file is downloaded if it is not already present in the local directory. Dropsonde datasets are stored in separate folders for every campaign. The paths to which the data is downloaded are written into a new yaml file.

list(cat['ACLOUD']['P5'][dataset])

['ACLOUD_P5_RF05',

'ACLOUD_P5_RF06',

'ACLOUD_P5_RF07',

'ACLOUD_P5_RF10',

'ACLOUD_P5_RF11',

'ACLOUD_P5_RF13',

'ACLOUD_P5_RF14',

'ACLOUD_P5_RF16',

'ACLOUD_P5_RF17',

'ACLOUD_P5_RF18',

'ACLOUD_P5_RF19',

'ACLOUD_P5_RF20',

'ACLOUD_P5_RF21',

'ACLOUD_P5_RF22',

'ACLOUD_P5_RF23']

dct = {dataset: {}}

for flight_id in list(cat['ACLOUD']['P5'][dataset]):

    # get the mission (campaign) name from the flight_id
    mission = flight_id.split('_')[0]

    # define the path to which the data should be downloaded
    path = f'./data/{dataset.lower()}/{mission.lower()}'

    # store the path in a dictionary
    dct[dataset].update({mission: path})

    # define the parameters for caching
    kwds = {'simplecache': dict(
        cache_storage=path,
        same_names=True
    )}

    # read the data to store it
    cat['ACLOUD']['P5'][dataset][flight_id](i_sonde=1, storage_options=kwds).to_dask()

# keep track of the paths to which data is downloaded
with open('./local_datasets_2.yaml', 'w') as f:
    yaml.dump(dct, f)
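
Once the paths are stored per dataset and campaign, a later script can look up the cache directory that belongs to a given flight. The sketch below assumes the nested structure built in the loop above (dataset, then campaign) and uses a plain dictionary in place of the content read back with yaml.safe_load. The helper name cache_dir_for is hypothetical.

```python
def cache_dir_for(paths: dict, dataset: str, flight_id: str) -> str:
    """Look up the local directory for the campaign a flight belongs to."""
    # the campaign name is the part of the flight_id before the first underscore
    mission = flight_id.split('_')[0]
    return paths[dataset][mission]


# structure as built by the loop above for the ACLOUD dropsondes
paths = {'DROPSONDES': {'ACLOUD': './data/dropsondes/acloud'}}

print(cache_dir_for(paths, 'DROPSONDES', 'ACLOUD_P5_RF05'))
```

The returned path can then be passed as cache_storage in the storage_options dictionary, in the same way as in the examples above.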